The Government approves the law on the proper use of AI, which requires labeling content created with this technology

The Council of Ministers today approved the draft law for the ethical, inclusive and beneficial use of artificial intelligence (AI), which aims to bring order to the practical application of this technology. The draft adapts Spanish legislation to the European Artificial Intelligence Regulation, agreed by the EU institutions on December 8, 2023 and approved by the European Parliament on March 13 of last year. “AI is a very powerful tool, which can be used to improve our lives or to spread hoaxes and attack democracy,” the Minister for Digital Transformation and Civil Service, Óscar López, said at a press conference.

As the minister recalled, the European regulation “defends our digital rights, identifying prohibited practices.” For example, it classifies failure to label AI-generated text, video or audio as a serious infringement. “We are all liable to be the target of such an attack. They are called deepfakes and they are prohibited,” said López.

Last week, the PP posted on X a video made with generative AI, titled The island of corruptions. In the video, the president of the Government, Pedro Sánchez, his wife, Begoña Gómez, and other figures (former minister José Luis Ábalos, his ex-partner Jessica Rodríguez and his adviser Kold [...]) [...] reality show The island of temptations. The video was withdrawn after protests from the Foreign Ministry of the Dominican Republic, which considered it “an attack” against the country. Once the draft law presented today comes into force, videos like that one will have to be labeled so that there is no doubt that the images are computer-generated.

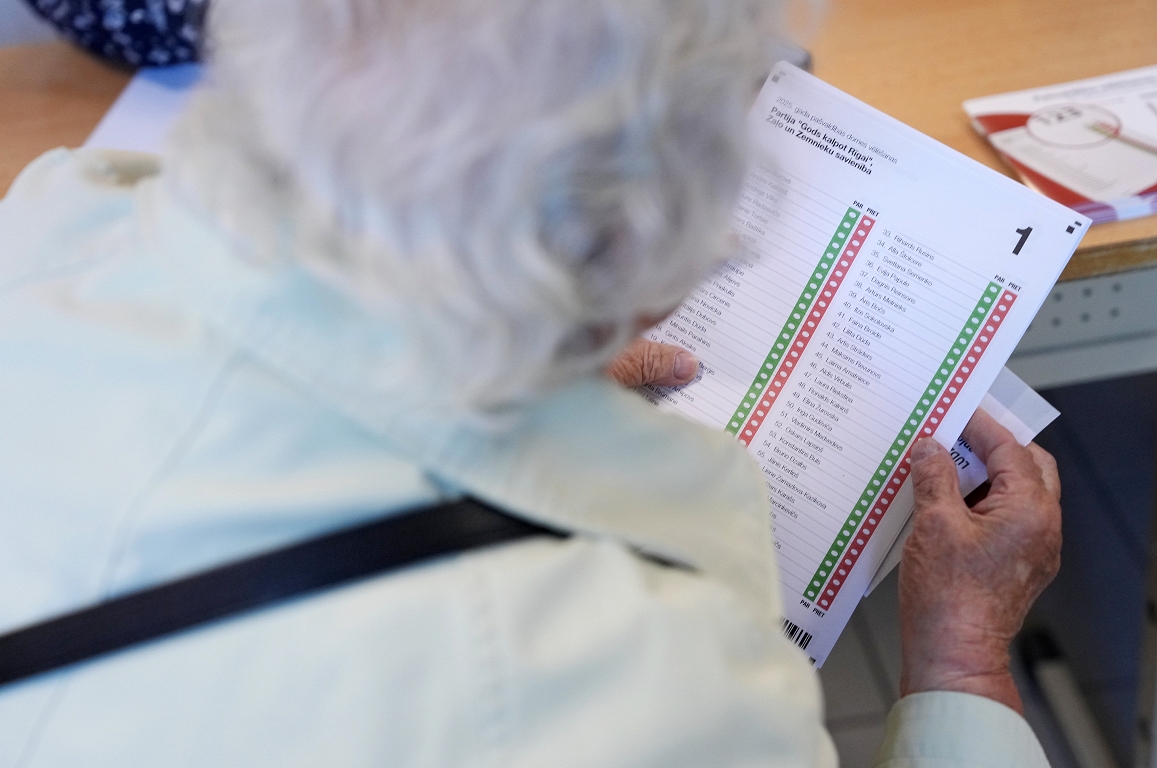

However, the minister did not specify how that labeling should be done, beyond what the European regulation already states: that deepfakes must be identified “in a clear and distinguishable manner at the latest at the time of the first interaction or exposure.” “When the regulations are developed, the Spanish Agency for the Supervision of Artificial Intelligence (AESIA) will set the rules,” said López. Who will check that they are followed? The management and processing of biometric data will be the responsibility of the Spanish Agency for Data Protection (AEPD); AI systems that may affect democracy will be the responsibility of the Central Electoral Board; and those that may influence the administration of justice will fall to the General Council of the Judiciary.

All other cases will be handled by AESIA. Set up last year and based in A Coruña, the agency is headed by Ignasi Belda and plans to hire at least 80 experts from various disciplines by 2026 to review whether different AI applications comply with the regulation.

López recalled that, as established by the European AI Regulation, those who fail to comply will face fines of up to 35 million euros and/or between 5% and 7% of worldwide turnover.

EUROPEAN FRAMEWORK

The European AI Regulation, considered the most advanced in the world in this field, takes a pioneering approach. To avoid becoming obsolete, the regulation classifies the different available applications of AI according to their risk and, on that basis, establishes different requirements and obligations. These range from unrestricted use, for example for a spam filter or a content recommender, to outright prohibition. The latter, more serious cases are applications “that go beyond a person's consciousness or are deliberately manipulative,” that exploit people's vulnerabilities, or that infer people's emotions, race or political opinions.

Between these two extremes are the so-called “high-risk” technologies, which are subject to permanent supervision. This category includes remote biometric identification systems, which a large part of Parliament wanted to ban outright, as well as biometric categorization and emotion recognition systems. It also covers systems that affect the safety of critical infrastructure and those related to education (behavior evaluation, admission systems and exams), employment (personnel selection), the provision of essential public services, law enforcement and migration management.

The regulation also establishes that periodic reports must be produced to update this classification, so that when new applications not covered in the document emerge, it can be determined which category they fall into (unrestricted use, use subject to restrictions, or prohibition).

That happened, for example, with generative AI, the technology behind tools such as ChatGPT: it emerged when negotiations on the regulation were already well advanced. There was debate over whether or not to mention it specifically. In the end it was included, and the regulation establishes that so-called foundation models will have to meet transparency criteria, such as specifying whether a text, a song or a photograph has been generated with AI, as well as ensuring that the data used to train the systems respects copyright.

The document did not establish, however, how this labeling of AI-generated content should be carried out, or how to ensure that copyright is respected. The draft presented today does not specify either of these two key issues.