Meta AI comes to the Ray-Ban Meta: simultaneous translation and information on what you are looking at. How it works

With an update to the Meta AI app, the smart glasses made by Luxottica and Zuckerberg's company gain intelligent features in Italy too. But they still struggle to be truly useful.

They are smart glasses, but until a few months ago there was little "smart" about them. The Ray-Ban Meta, made by EssilorLuxottica together with Meta, integrated the first Meta AI features in Italy last November. It was little more than a voice assistant that answered the questions we asked through the microphones hidden in the temples. Commands are activated by saying "Hey Meta", and at that point we could ask some "general" questions, such as the best restaurants in a city or advice on a birthday gift. Not much more. Behind it is Meta's generative artificial intelligence model, which until now had been somewhat "crippled" in our country because of issues complying with European regulations, in particular the GDPR, the law on personal data. The Europe-wide release took place in March. The chatbot appeared on all the company's platforms – WhatsApp (no, that blue circle cannot be disabled), Instagram and Facebook – and the old Meta View app (used with the Ray-Ban Meta) was renamed Meta AI. Back to our glasses: a few days ago an update was released that also brings new features to this device. Because it is a wearable device, generative artificial intelligence truly becomes an assistant that can help us while we walk, look around and talk. Or at least, that is the intention.

Interaction with what we are looking at

The most interesting feature coming to the Ray-Ban Meta is the ability to ask questions and receive information about what we are looking at. When we say "Look" to Meta AI, the glasses take a photo of what their cameras are framing, which is then used to analyze the place or object pictured according to our requests.

We ran some tests to see whether Meta AI (and its answers) can really be useful in different situations. As a tool for people with visual impairments, the glasses are an excellent ally: we asked them to read a bank's opening hours and to identify what a shop sells, and they gave correct information. For those seeking more details about a particular building, monument or square, it is not there yet. To identify a location, Meta AI asks you to set geolocation to "always" in the settings, but even so it cannot precisely determine what we are looking at or where we are. It can tell the city and the area – we were on Via Solferino and were told we were in Milan, Zone 1 – and little else. Looking at the Corriere della Sera building, it replied: "It seems to be a historic building with elegant architecture and decorative details." It cannot even give directions. Asked where the bus we are looking at is going, it replies: "I can't help you with this yet, but I learn new things every day." If you ask, from Milan, how to get to Como, the answer is the same.

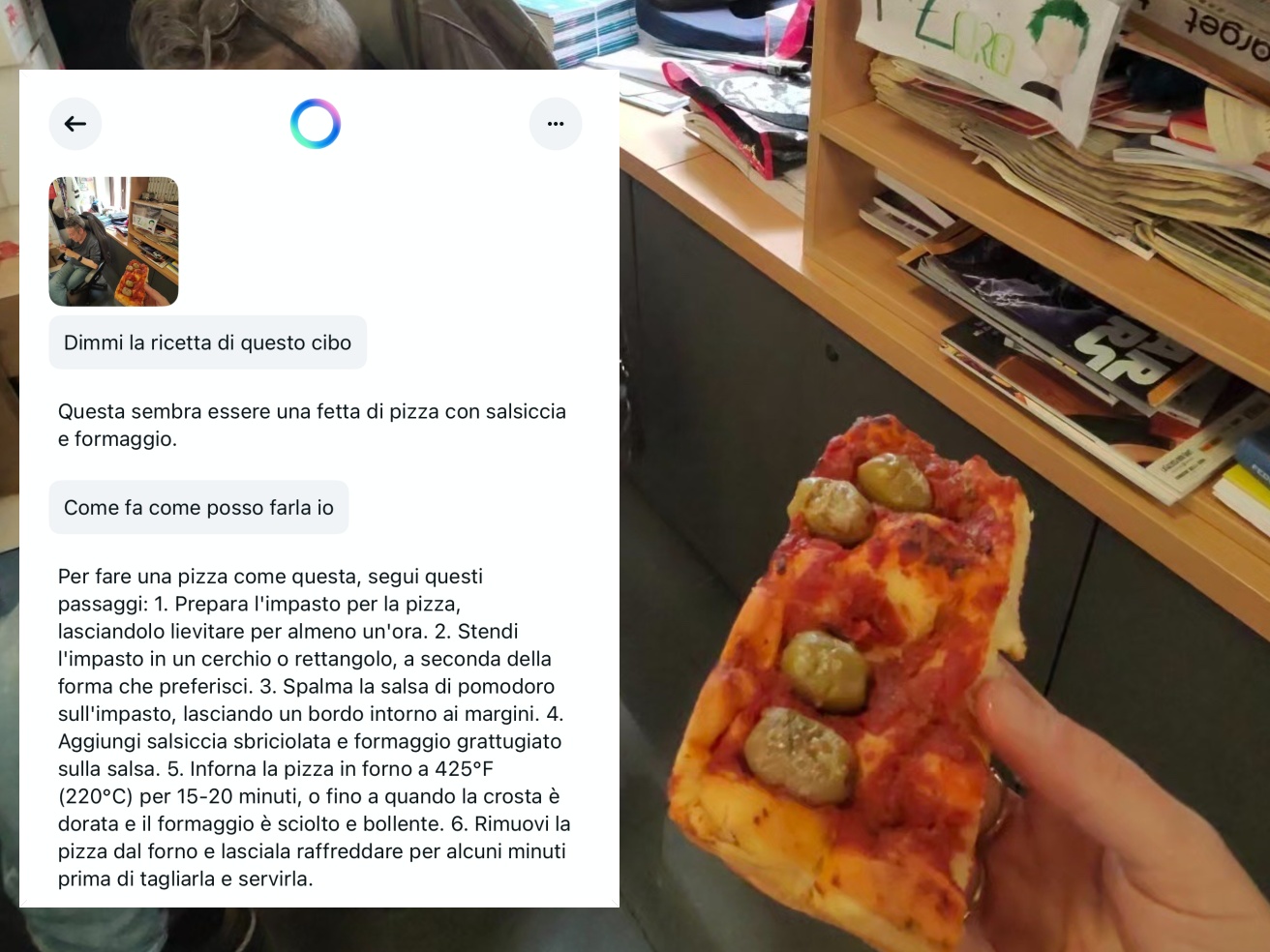

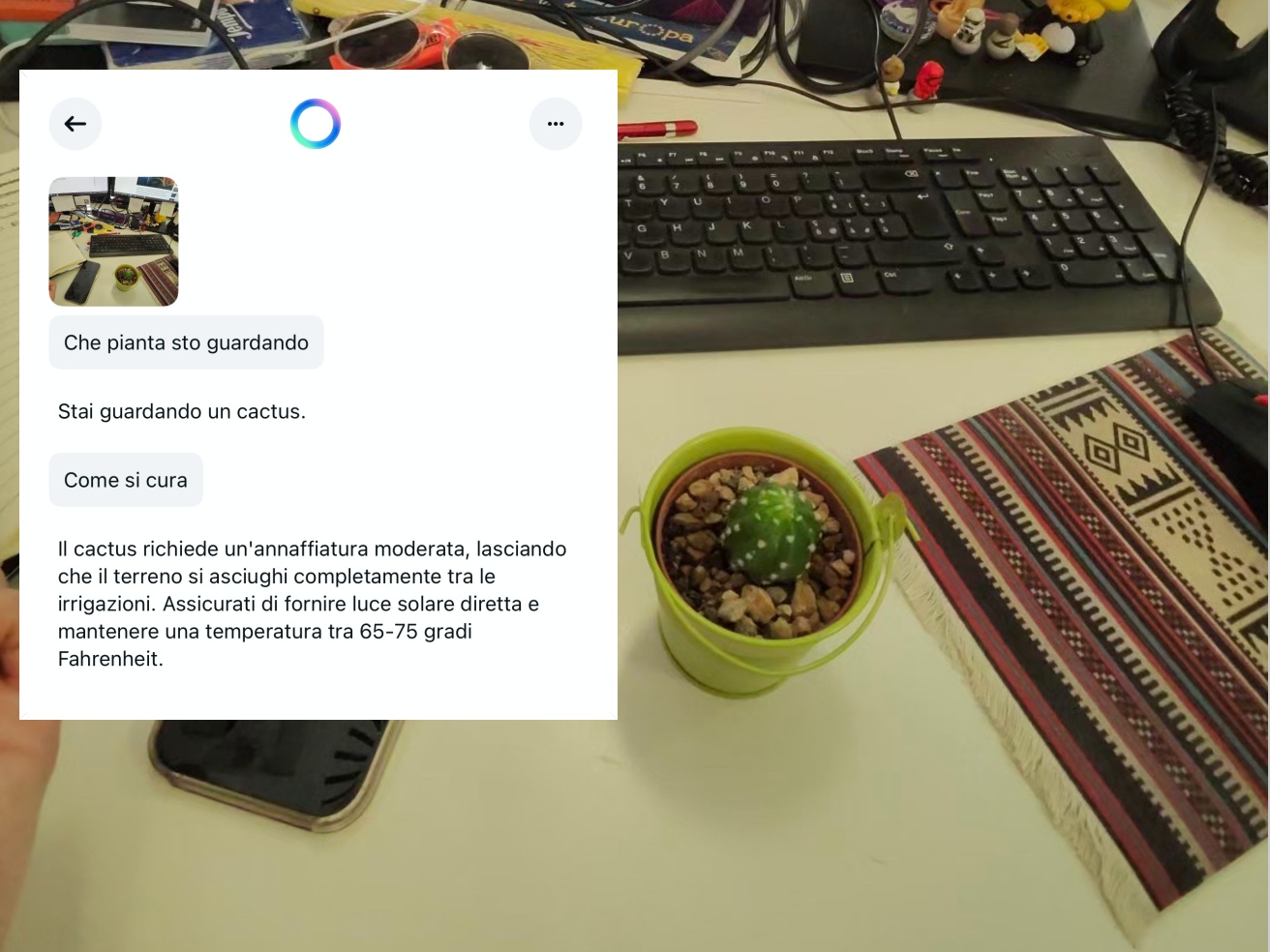

Even for questions about specific objects, Meta AI, working through the Ray-Ban Meta's sensors, is very generic and not particularly useful. We framed a scooter and asked for the brand: "It seems to be a Xiaomi model." No, it's a Ninebot. Then we looked at a slice of pizza topped with olives. To Meta AI, however, it looks like "a slice of pizza with sausage and cheese". Asked for the recipe, it answered correctly but in a rather "robotic" way. Next, we look at a plant. It understands that we are "looking at a cactus". "How do you care for it?" we add. The information is generic, with no regard for the size of the plant or its surroundings: "It requires moderate watering, letting the soil dry out completely between waterings. Make sure to provide direct sunlight and maintain a temperature between 65-75 degrees Fahrenheit." Even though we are in Italy and speaking Italian, it gives us an American unit of measurement. This is just the beginning, but generative AI evolves as it is used. The expectation, therefore, is that in the coming months, with more and more users testing it like this and models trained on the data that accumulates, the answers will improve.

Simultaneous translations

Simultaneous translation has also arrived on the Ray-Ban Meta. For now, four languages are supported: English, Spanish, French and Italian. For each language, you have to wait for the corresponding data package to download in the app. In addition, translation requires keeping the app open in the relevant section. We select the language we speak as the wearer of the glasses and the language of the person we are talking to. Once the conversation starts, we hear the translation through the speakers in the temples, while the other person can read the translation on the phone screen, or we can manually play the "voice" version of each sentence. Here too, everything is still a work in progress: we ran an Italian-English test. The context and meaning of the conversation come across, but the translation is far from excellent.