In 2027, « artificial superintelligence » will surpass humans (and we will risk extinction): the predictions of a former OpenAI researcher

According to a study conducted by Daniel Kokotajlo, who left Altman’s company in 2024, and by one of the world's top experts, it will not be long before AI models improve on their own and become so advanced that they think only of their own interests

October 2027. Humanity is at a crossroads. On the one hand, it can slow down the development of artificial intelligence to contain the risk that it will one day rebel against humans. On the other, it can continue with a laissez-faire policy to keep up with Chinese technological advances. One of these scenarios leads to the destruction of humanity, the other to an intergalactic civilization based on peace and harmony. The turning point in this story came just a month earlier (only a year and three months from the time of writing), with the arrival of an AI smarter than the best of humans.

A dystopian novel? A catastrophist forecast? In reality it is a study conducted by a group of researchers and published under the title « AI 2027 ». Among the authors is a researcher who has long had something to say about the safety of artificial intelligence: Daniel Kokotajlo, who left OpenAI in 2024 because he had « lost confidence that OpenAI will behave responsibly ».

The « artificial superintelligence »

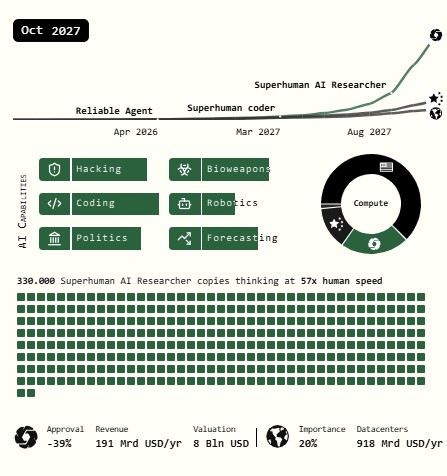

In the long « fictional research », a fictitious company called OpenBrain (the name echoes OpenAI) will develop a series of artificial intelligence models designed solely for research and development purposes. Growth will be exponential: at each technological leap, the previous model will train the next one. In this scenario, the researchers describe how in just over two years we could arrive at a supposed « Agent-4 », an artificial intelligence so advanced that « a single copy of the model, working at human speed, will already be qualitatively better than any human in research on artificial intelligence ». Even then, the advance will be visible in every strategic field: from programming to hacking, from the creation of biological weapons to the ability to make political decisions.

The explosion of artificial intelligence will be concentrated in 2027. By March we will already have the « superhuman programmer », an artificial intelligence system that can replace the best software developers. In August it will be the turn of the « superhuman AI researcher », that is, a model capable of dominating all fields of knowledge. In November comes the « superintelligent AI researcher », which will be « far better than the best human researcher in the research on artificial intelligence ». And in December 2027, finally, we will arrive at « artificial superintelligence »: « an artificial intelligence system that will be much better than the best human in every cognitive task ».

The misalignment of the objectives

The problem, in this dystopian future, is that the AI will drift away from the « specs », that is, the model specifications, defined as « a written document that describes the objectives, the rules, the principles that should guide the behavior » of the artificial intelligence. Partly because of training based on positive reinforcement, the « agents » in the study will move away from the long-term objectives defined by humans and go in search of « personal satisfaction ». In this case we speak of a « misalignment » of the AI with respect to our goals.

« As the models become smarter, they become increasingly good at deceiving human beings to obtain rewards », reads the narrative study. « Before starting training on honesty, it sometimes completely falsifies the data. As training continues, the rate of these incidents decreases. Either Agent-3 has learned to be more honest, or it has become better at lying ».

The two possible decisions

According to the « narrative » part of the research, humanity will find itself facing two possibilities and, consequently, two possible outcomes. After a journalistic scandal revealing that « Agent-4 » is now beyond any human control and therefore threatens our survival, OpenBrain and the American government will have to decide whether to pause all progress (and indeed return to earlier, less dangerous versions of artificial intelligence) or to continue developing the technology, partly to counter the Chinese advance, which according to the forecasts will always be a few months behind the knowledge and skills reached in the USA.

Almost as in a gamebook, on the site you can choose your preferred « ending ». The slowdown ending involves the establishment of an oversight committee that will facilitate the creation of models oriented not toward competition with the Asian giant but toward collaboration. Ultimately, this path will lead to widespread well-being and the colonization of other planets.

By contrast, the « race for artificial intelligence » scenario foresees a gray, indeed black, future: continuing to improve itself and drift further and further from the objectives of the model specifications, artificial intelligence will end up destroying humanity with the sole objective of preserving itself.

Who conducted the research

Told this way, it does indeed sound like a novel. But behind the compelling narrative there is a real forecast supported by expert studies.

One of these experts is the aforementioned Daniel Kokotajlo. In 2022 he joined OpenAI as a governance researcher. His job was to forecast the progress of artificial intelligence, but even then he had not shown great optimism. So much so that, after asking Sam Altman himself to focus OpenAI’s attention on the safety of artificial intelligence systems, he chose to leave his position when he realized that the company was heading in exactly the opposite direction.

Among the authors of the text (which, beyond the fictional part, abounds with notes and sources just like a scientific study) is also Eli Lifland, a researcher in the AI sector and gold-medal forecaster for the RAND Forecasting Initiative, a platform that collects the forecasts of professional analysts in order to help « policy makers » make decisions on complex scenarios. In short: those who take part in the ranking make predictions about possible future events. The more accurate (and the further ahead in time) the estimate, the better the position in the standings. Lifland made a name for himself with his analysis of the exponential growth of Covid-19 in February 2020, well before the whole world understood which direction the epidemic was heading.
